Improving Query Performance (At the database)

April 13, 2011 15 Comments

I recently was assigned to a project at a company with a rather large BOBJ deployment. Their production environments contained well in excess of 100,000 Web Intelligence documents. These documents are running against a rather sizable data warehouse. My job was to reduce the burden on the data warehouse. Tens of thousands of queries were hitting the data warehouse on an average day. If we could reduce the CPU time at the database, we could prolong the eventual expansion of hardware in the data warehouse.

I spent a few weeks examining the environment, the universes, and some of the slower documents. I also spoke with some of the bright people there, and eventually came up with some ideas for improving query performance. Note that my focus was reducing CPU time on the database. So I wasn’t looking to improve report performance, but some of these ideas will have that effect anyway. There may also be other ways to improve report performance that will have little or no affect on database performance.

Query Stripping

No, I’m not talking about having a bunch of naked queries. I’m talking about stripping out everything that isn’t needed in your queries. Often times, we find that Web Intelligence documents are not developed from scratch. Existing documents are copied, and re-used. Users will take existing reports, copy them, then add what they need to make a new document. The problem is that, while they add new objects and/or queries, they don’t always remove old objects and queries that are not needed for this new document.

The end result is a document that contains far more queries than are needed, as well as more granularity of data than is needed.

Web Intelligence XI3.1 SP3 contains a new feature called Query Stripping. You’ll find it as a check box in t he document properties. When enabled, this feature will automatically remove any queries and/or objects from the SQL sent to the database that do not directly contribute to the report(s) in the document. This is a cool feature. However, it is a feature that is only available when using universes built against OLAP cubes. The vast majority of universes are built against relational databases, and this feature won’t work for those universes. 😦

BI 4.0 doesn’t have this limitation. Query Stripping should be available for all data sources in BI 4.0. So, until you upgrade to BI 4.0, you’ll have to manually strip your queries. This can be tedious, but may be worth the effort. So, since we have to do this manually, let’s look at the things that will get us the biggest bang for our buck.

Remove any unneeded queries. The fewer queries we run, the less burden we place on the database. So, purge and delete any queries that are no longer required for the report(s).

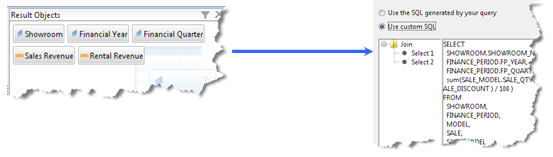

Remove any unneeded measures. As you may know, if a query has measures from multiple tables, Web Intelligence will split them into multiple queries, one for each measure from a different table, before sending the queries to the database. This is to avoid SQL traps that can yield exaggerated results.

Therefore, removing a single measure object from a query can eliminate a query sent to the database, provided that no other measures are coming from the same table. Reducing the number of queries sent to the database not only reduces the burden on the database, but it improves overall query time in the document.

Schedule Purging

Another problem that comes with time: documents that are scheduled to run on a scheduled basis, often continue running long after they are no longer needed. Part of the cause of this problem is that, by default, scheduled documents will run for ten years before they expire. I can’t imagine very many documents remaining useful for that long.

You can use Auditor to find documents that are no longer being viewed. Scheduled documents that are no longer used are still running their queries, and, therefore, are adding overhead to the database. Removing these schedules will free up space in the File Store, as well as free up resources on the database server.

While reviewing scheduled documents, also take a look at Publications. These may be harder to to track down, as Auditor won’t tell you if someone opened the email they got with the document attached. In this case, you will have to survey the recipients and ask if they still use the documents they receive. If no one responds telling you that they use it, feel free to remove the schedule. You can always add it back later if someone complains.

Index Awareness

This is a feature of the universe, and can be used to speed up queries significantly, depending on your database, and the types of queries being written. It can speed up queries in several ways:

- It can remove joins from the SQL

- It can remove tables from the SQL

- It can search indexed, rather than non-indexed, columns

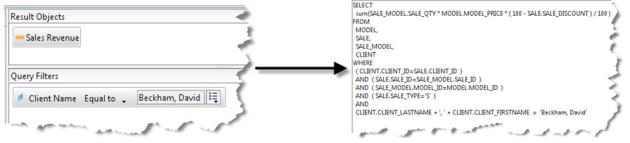

How does it work? Well, if a filter is added to a query that searches for a value in a non-indexed column, Index Awareness will edit the SQL to search for a matching value in an indexed column. In the example below, we’re searching for sales revenue for a specific client. Without Index Awareness, the SQL matches what is in the query panel.

First of all, notice that Client Name is a transformation. It’s the concatenation of two columns in the database. Searching a transformation is never going to be fast, as it cannot be indexed in the database. Also, notice that the CLIENT table is in the SQL, and it is joined to the SALE table. With Index Awareness enabled, the same query looks like this.

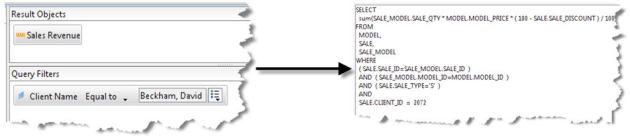

Instead of searching a Client Name, the SQL is now searching the Client ID, which is an indexed column. In addition, since the Client ID exists in the SALE table, the SQL no longer needs the CLIENT table at all. It can remove the CLIENT table, as well as the join to the CLIENT table. This query will run much faster.

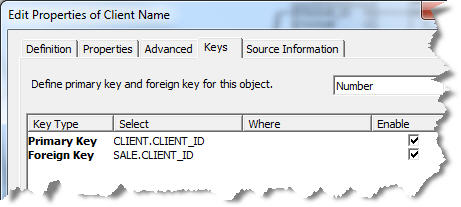

Index Awareness is enabled in the object, in the universe. In this case, Client Name is defined with Index Awareness. On the Keys tab of an object, a Primary Key can be defined, as well as one or more Foreign Keys.

The Key values are then stored in the LOV for the object. When a user selects a value from the LOV, the SQL will switch the value to the indexed column. Of course, if the user does not select from the LOV, but types in the value instead, Index Awareness is not used. So you will need to train your users to select from the LOV.

Index awareness works best when used on a transactional database, or a snowflake schema. Star schemas are usually already optimized for queries, so you may not get as much improvement with a star schema.

Pushing Transformations

Complex SQL transformations are often seen in universe objects, and are often an easy way to get the data to appear the way we need it for reporting. In the previous example, Client Name was a simple transformation. Here’s an example of a complex transformation:

Query stripping will not help if your customer have tons of pixel perfect reports.

We migrated from oracle 10 to Sybase IQ 15.2 and the overall query response time was reduced ~30-50%

Srulis, I’m curious about your statement, “Query stripping will not help if your customer have tons of pixel perfect reports.” The database doesn’t care, or even know, that your reports are pixel perfect, or highly formatted. Reducing the load on the database is irrelevant to the formatting of the report. So, can please explain your statement?

So, as you well might now Query stripping is an excellent option which is being ‘borrowed’ from Bex Analyzer and it offers possibility to reduce the MDX syntax which is sent to Olap dataource (MS Analysis service, Essbase, BW). If your customer is looking for pixel pefect reporting then Query stripping is useless, because you select only those objects that will be used in a report, it’s worth to use it only in ad-hoc reporting. That’s that

I see what you’re saying, but query stripping can be useful regardless of the report formatting. If you have objects, and/or queries in the document that are not contributing to the report(s), they should be stripped out, unless needed for drilling. Having a pixel perfect report doesn’t eliminate unused objects from the SQL.

My customer just moved from XI 3.1 SP1 to SP3 so I just used query stripping for the first time. Looking forward to hearing more about naked queries at your “Web Intelligence Gone Wild” presentation at ASUG SBOUC this fall.

Here is what I did on the DB2 database.

Partitioning the database so the queries will scan only the necessary partitions rather than doing a full table scan.

In the Cognos report 🙂 we have eliminated unwanted query items (which you have covered as the second point) which significantly resulted in the faster queries.

In my BO reports, I have used ‘query on query’ feature. In that way by reports ran faster rather than struggling to pull everything into the report & then filtering the unwanted records.

More to come. 🙂

Hi Jansi,

What is ‘query on quer’y feature.

Yes, that is correct.

Maybe Jansi meant that he uses drill-through reports to see more detailed information?

Jansi was talking about the Query on Query feature. She would build one query, then use the results from that query as a condition on the second query. It’s similar to a subquery, but can be used with different data sources.

Be a friend to your DBA. 🙂 The ETL & reporting team needs to work closely with a DBAs’ team dedicated to the project. Basement (data modeling) has to be the first place where all the performance oriented measures are taken. (indexes, keys declaration, etc…)

My main complaint about index awareness is the “perfect storm” that has to occur before the feature is triggered. The user has to pick from a list of values in order to make it work. If you type in the name rather than pick from the list, then Web Intelligence has no opportunity to retrieve the ID value associated with the name.

I completely agree, Dave. It requires user training, and there’s still no guarantee that the users will do it right. There’s an opportunity for failure that can;t be prevented.

Hi Micheal,

In your index Awareness section, if you would have not included foreign key SALE.CLIENT_ID in object Ket tab ,then the CLINET table would have been considered in query and instead of taking client id from SALES table it would have taken it from CLINET table. Is it?

Thank you so much for the explanation. I have been trying to implement query stripping in IDT 4.1 and Web Intelligence 4.2 SP3. I found another good article Query Stripping in WebI which may help some of your readers. They’ve explained how to implement them at Universe and Report level.